Matrices as Linear Maps

Many of us have encountered matrices. People who work with data know them as a way to represent the data itself, whereas for many of us the definition of matrices dates back to high school. We all learned the multiplication rule, which goes as follows:

- Iterate through the rows of the first matrix.

- For each row, iterate through the columns of the second matrix.

- Multiply the row of the first matrix and the column of the second matrix entry by entry.

- Sum these products to get the entry of the result at that row and column.
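As a minimal sketch of this rule, the plain-Python function below multiplies two matrices stored as lists of rows. The name `matmul` is a hypothetical helper for illustration, not a library function.

```python
def matmul(A, B):
    """Multiply matrices A and B (lists of rows) using the row-by-column rule."""
    n_rows, n_inner, n_cols = len(A), len(B), len(B[0])
    if any(len(row) != n_inner for row in A):
        raise ValueError("number of columns of A must equal number of rows of B")
    result = []
    for i in range(n_rows):          # iterate through the rows of the first matrix
        new_row = []
        for j in range(n_cols):      # iterate through the columns of the second matrix
            # multiply entry by entry and sum the products
            new_row.append(sum(A[i][k] * B[k][j] for k in range(n_inner)))
        result.append(new_row)
    return result


print(matmul([[2, 0], [0, 3]], [[3], [4]]))  # [[6], [12]]
```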

In this post, I want to show you that there is more to what matrices represent, namely how they work as linear maps. Enjoy reading and see you at the finish line!

Functions

In order to understand matrices and how they represent linear transformations of their inputs, we first need to understand how functions work and what they represent from a broader perspective.

One way to understand and visualize functions is to draw a Venn diagram: the function takes each value from its domain and maps it to a value in its codomain. In this sense, functions represent a mapping between spaces.

Another way to understand functions is to use a table whose columns hold the inputs and the corresponding outputs.

| x | f(x) |
|---|---|
| 1 | 3 |
| 2 | 6 |
| 3 | 9 |

This representation makes it easy to see the effect of the function on its inputs. We can also write the function in a more compact form, which makes it easier to use in combination with other functions or with arbitrary inputs.

$f(x) = 3x$. This representation is powerful in the sense that we can combine it with other functions.
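A minimal sketch, assuming Python, of writing $f(x) = 3x$ as code and regenerating the input/output table from the compact form:

```python
def f(x):
    """The example function from the text: f(x) = 3x."""
    return 3 * x


# Rebuild the input/output table from the formula
table = [(x, f(x)) for x in (1, 2, 3)]
for x, fx in table:
    print(f"| {x} | {fx} |")
```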

Combination of Functions

Assume that we have a function $h(x)=5x$, and we want to first apply $f(x)$, then apply $h$ to its output, that is, compute $h(f(x))$. Let's observe the effect of this combination of functions on different values:

| x | f(x) | h(f(x)) |
|---|---|---|
| 1 | 3 | 15 |
| 2 | 6 | 30 |
| 3 | 9 | 45 |

We can describe this combination for any input: $h(f(x)) = 5(3x) = 15x$. The right representation lets us understand the effect of a function on its inputs and combine it with other functions.
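The same idea in code: a small hypothetical `compose` helper (a sketch, not a library API) builds $h(f(x))$ out of the two functions, reproducing the table values and the closed form $15x$.

```python
def f(x):
    return 3 * x


def h(x):
    return 5 * x


def compose(outer, inner):
    """Return the function x -> outer(inner(x))."""
    return lambda x: outer(inner(x))


h_after_f = compose(h, f)
print([h_after_f(x) for x in (1, 2, 3)])  # [15, 30, 45]

# The combination is again a simple formula: h(f(x)) = 15x
print(all(h_after_f(x) == 15 * x for x in range(-10, 11)))  # True
```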

Linear Maps

Let's now focus on a specific type of function: linear maps. Without going into too much detail, these functions have a very useful property: they preserve the operations applied to their inputs.

Operation of Addition

$f(x+y)=f(x)+f(y)$

Operation of Scalar Multiplication

$f(cx)=c\,f(x)$ for any scalar $c$

A linear map preserves these operations: it does not matter whether the map is applied before or after the operation. That is, we get the same result by applying $f$ to $x+y$ as by applying $f$ to $x$ and $y$ individually and then adding the results. Assume once again that $f(x)=3x$; then the following holds:

$f(x+y)=3(x+y)=3x+3y=f(x)+f(y)$

$f(3x)=3(3x)=3\cdot 3x=3f(x)$
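The two properties can also be spot-checked numerically. The helpers `is_additive` and `is_homogeneous` below are hypothetical and only test a handful of sample inputs, so they can reveal that a function is not linear but cannot prove that it is.

```python
def f(x):
    return 3 * x


def square(x):
    return x * x


def is_additive(func, pairs):
    """Check func(x + y) == func(x) + func(y) for every (x, y) pair."""
    return all(func(x + y) == func(x) + func(y) for x, y in pairs)


def is_homogeneous(func, pairs):
    """Check func(c * x) == c * func(x) for every (c, x) pair."""
    return all(func(c * x) == c * func(x) for c, x in pairs)


pairs = [(1, 2), (-4, 7), (0, 5), (10, -3)]
print(is_additive(f, pairs), is_homogeneous(f, pairs))  # True True

# For contrast, squaring is not linear: (1 + 2)**2 = 9, but 1**2 + 2**2 = 5
print(is_additive(square, pairs))  # False
```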

Okay, we now know that linear maps have some nice properties, but what is so special about them? It turns out that these transformations can model many phenomena in statistics, physics and machine learning. In particular, rotations about the origin and scalings are linear maps: they transform data points by rotating or stretching them, and this is very powerful. Dimensionality reduction is an area of machine learning where you see linear maps applied quite often. Change of basis is another concept that heavily uses linear transformations.

- Change of basis

- Functions that map input vectors from one vector space to another

Don't worry if you don't recognize these terms; the important thing to remember here is that linear maps are functions that transform data points in N-dimensional spaces. Keep reading to see how matrices can do that...
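To make the rotation example concrete before any matrices appear, here is a sketch of a rotation about the origin written as an ordinary function, with numerical checks of both linearity properties. `rotate` and `close` are hypothetical helpers; the comparison uses a tolerance because of floating-point rounding.

```python
import math


def rotate(v, theta):
    """Rotate the 2D point v = (x, y) counterclockwise about the origin by theta radians."""
    x, y = v
    c, s = math.cos(theta), math.sin(theta)
    return (c * x - s * y, s * x + c * y)


def close(p, q, tol=1e-9):
    """Compare two 2D points up to floating-point rounding."""
    return all(abs(a - b) < tol for a, b in zip(p, q))


theta = math.pi / 3
u, w, k = (1.0, 2.0), (-3.0, 0.5), 4.0

# Addition: rotating u + w equals rotating u and w separately, then adding
sum_first = rotate((u[0] + w[0], u[1] + w[1]), theta)
rotate_first = tuple(a + b for a, b in zip(rotate(u, theta), rotate(w, theta)))
print(close(sum_first, rotate_first))  # True

# Scalar multiplication: rotating k * u equals k times the rotated u
scaled_first = rotate((k * u[0], k * u[1]), theta)
print(close(scaled_first, tuple(k * a for a in rotate(u, theta))))  # True
```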

```python
import sys

# plot_helper is the author's local plotting module, stored in ../scripts/
sys.path.append('../scripts/')

from plot_helper import *
```

How to Represent Linear Maps

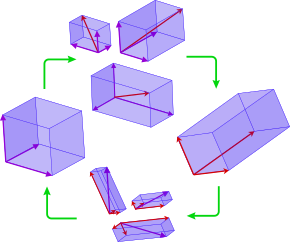

Now that we understand linear maps and how these functions can transform shapes, let's see how we can represent them.

1D

We have already seen that we can represent linear maps as functions that map inputs to outputs while preserving addition and scalar multiplication. For $f(x)=3x$, we know that this function scales points in 1D by a factor of 3.

2D

From here on, I will distinguish between notations: $x$ represents a 1D point and $\mathbf{x}$ represents an N-dimensional vector. Now the question becomes: how can we scale the vectors $\color{green}{\begin{bmatrix}1\\0\end{bmatrix}}$ or $\color{red}{\begin{bmatrix}0\\1\end{bmatrix}}$? Let's define a linear map $f(\mathbf{x})$ that stretches a vector horizontally by a factor of 2 and vertically by a factor of 3, and see how it transforms the standard unit vectors. Let's visualize the unit vectors first.

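```python
import numpy
# Standard unit vectors [1, 0] and [0, 1]; plot_vector comes from plot_helper
input_vector = [numpy.array([1, 0]), numpy.array([0, 1])]
plot_vector(input_vector)
```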

We want to know what happens to the standard unit vectors $\color{green}{\begin{bmatrix}1\\0\end{bmatrix}}$ and $\color{red}{\begin{bmatrix}0\\1\end{bmatrix}}$:

$$f\left(\color{green}{\begin{bmatrix}1\\0\end{bmatrix}}\right) = \begin{bmatrix}2\\0\end{bmatrix}, \quad f\left(\color{red}{\begin{bmatrix}0\\1\end{bmatrix}}\right) = \begin{bmatrix}0\\3\end{bmatrix}$$

```python
# Matrix whose columns are f([1, 0]) and f([0, 1])
A = numpy.array([[2, 0], [0, 3]])
# plot_linear_transformation comes from plot_helper
plot_linear_transformation(A)
```
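A quick NumPy check of what the matrix `A` does to the standard unit vectors; this sketch assumes only NumPy, not the author's `plot_helper` module.

```python
import numpy as np

# The stretching map from the text as a matrix (2x horizontally, 3x vertically)
A = np.array([[2, 0], [0, 3]])
e1, e2 = np.array([1, 0]), np.array([0, 1])

# Multiplying by a standard unit vector picks out one column of A
print(A @ e1)  # [2 0]
print(A @ e2)  # [0 3]
print(np.array_equal(A @ e1, A[:, 0]), np.array_equal(A @ e2, A[:, 1]))  # True True
```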

As you can see in the graph above, we have just transformed our standard unit vectors. Can we now transform a new vector $\begin{bmatrix}3\\4\end{bmatrix}$ using only what we know about how the standard unit vectors are transformed? The answer is yes!

We can express the vector as a linear combination of the standard unit vectors:

$$\begin{bmatrix}\color{green}3\\\color{red}4\end{bmatrix} = 3\begin{bmatrix}\color{green}1\\0\end{bmatrix} + 4\begin{bmatrix}0\\\color{red}1\end{bmatrix}$$

We also know by now that linear maps preserve addition and scalar multiplication, and we know the effect of the transformation on the standard unit vectors. Then:

$$\begin{aligned}
f\left(\begin{bmatrix}\color{green}3\\\color{red}4\end{bmatrix}\right) &= f\left(\begin{bmatrix}\color{green}3\\0\end{bmatrix}\right) + f\left(\begin{bmatrix}0\\\color{red}4\end{bmatrix}\right)\\
&= 3f\left(\color{green}{\begin{bmatrix}1\\0\end{bmatrix}}\right) + 4f\left(\color{red}{\begin{bmatrix}0\\1\end{bmatrix}}\right)\\
&= 3\color{green}{\begin{bmatrix}2\\0\end{bmatrix}} + 4\color{red}{\begin{bmatrix}0\\3\end{bmatrix}}\\
&= \color{green}{\begin{bmatrix}6\\0\end{bmatrix}} + \color{red}{\begin{bmatrix}0\\12\end{bmatrix}}\\
&= \begin{bmatrix}6\\12\end{bmatrix}
\end{aligned}$$

Great! We computed the transformation of $\begin{bmatrix}\color{green}3\\\color{red}4\end{bmatrix}$ just by knowing $f\left(\color{green}{\begin{bmatrix}1\\0\end{bmatrix}}\right)$ and $f\left(\color{red}{\begin{bmatrix}0\\1\end{bmatrix}}\right)$.
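The same derivation in NumPy, as a sketch: `f` below is a hypothetical Python function that implements the stretch directly (no matrix involved), and the result for $\begin{bmatrix}3\\4\end{bmatrix}$ is rebuilt from $f$ of the two unit vectors alone.

```python
import numpy as np


def f(v):
    """Hypothetical helper: stretch a 2D vector by 2 horizontally and 3 vertically."""
    return np.array([2 * v[0], 3 * v[1]])


e1, e2 = np.array([1, 0]), np.array([0, 1])
x = np.array([3, 4])

# Linearity: f(3*e1 + 4*e2) = 3*f(e1) + 4*f(e2)
via_basis = x[0] * f(e1) + x[1] * f(e2)
print(via_basis)                        # [ 6 12]
print(np.array_equal(via_basis, f(x)))  # True
```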

Matrices

How can we represent the linear map $f(\mathbf{x})$ on vector inputs? Is there an easy way to represent the transformation so that we can combine it with other transformations?

The answer is matrices, and this is where the connection between linear maps and matrices appears. Let $\mathbf{A}$ represent this transformation; its columns are the transformed standard unit vectors:

$$\mathbf{A}=\begin{bmatrix}f\left(\color{green}{\begin{bmatrix}1\\0\end{bmatrix}}\right) & f\left(\color{red}{\begin{bmatrix}0\\1\end{bmatrix}}\right)\end{bmatrix}=\begin{bmatrix}\color{green}2&\color{red}0\\\color{green}0&\color{red}3\end{bmatrix}$$

We can now apply this transformation to any input vector and get the transformed vector. Just like the function $f(x)$, the matrix gives us a linear map $f(\mathbf{x})=\mathbf{A}\mathbf{x}$ that takes an input, transforms it, and outputs the result. Do you recognize the formula $\mathbf{\hat{y}}=\mathbf{X}\boldsymbol{\theta}$? This is the prediction formula of linear regression, and you can now read it as the parameter vector $\boldsymbol{\theta}$ on the right-hand side being transformed by the matrix $\mathbf{X}$.
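The recipe of stacking the transformed unit vectors as columns can be sketched for any linear map from $\mathbb{R}^n$ to itself. The helper `matrix_of` is hypothetical and assumes the map takes and returns length-$n$ NumPy arrays.

```python
import numpy as np


def f(v):
    """Hypothetical linear map: stretch by 2 horizontally and 3 vertically."""
    return np.array([2 * v[0], 3 * v[1]])


def matrix_of(linear_map, n):
    """Build the n x n matrix whose j-th column is linear_map applied to the j-th unit vector."""
    basis = np.eye(n)  # columns are the standard unit vectors
    return np.column_stack([linear_map(basis[:, j]) for j in range(n)])


A = matrix_of(f, 2)
print(A)

# The matrix reproduces the map on a vector that is not a unit vector
x = np.array([3.0, 4.0])
print(np.allclose(A @ x, f(x)))  # True
```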

Transformation

Let's apply the transformation matrix $\mathbf{A}=\begin{bmatrix}2&0\\0&3\end{bmatrix}$ to the vector $\mathbf{x}=\begin{bmatrix}3\\4\end{bmatrix}$ and see the result.

$$\mathbf{A}\mathbf{x}=\begin{bmatrix}(2\cdot 3)+(0\cdot 4)\\(0\cdot 3)+(3\cdot 4)\end{bmatrix}=\begin{bmatrix}6\\12\end{bmatrix}$$

This is the same result we got earlier. Each entry of $\mathbf{A}\mathbf{x}$ is a row of $\mathbf{A}$ multiplied entry by entry with $\mathbf{x}$ and summed, which is exactly the row-by-column multiplication rule. We are now convinced that a transformation matrix, when multiplied with a vector $\mathbf{x}$, transforms the vector and outputs another vector, just like a function.

We can also see the transformation visually!

```python
import numpy as np

A = np.array([[2, 0], [0, 3]])
# Transform the vector [3, 4] with A
new_vector = A.dot(np.array([3, 4]))
# plot_vector comes from plot_helper
plot_vector([new_vector])
```
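The idea of combining functions carries over to matrices: applying one linear map after another is the same as multiplying their matrices. In the sketch below, `R` is an assumed example matrix (a 90° counterclockwise rotation about the origin) chosen only for illustration.

```python
import numpy as np

A = np.array([[2, 0], [0, 3]])   # stretch: 2x horizontally, 3x vertically
R = np.array([[0, -1], [1, 0]])  # rotate 90 degrees counterclockwise about the origin
x = np.array([3, 4])

# Apply A first, then R, as two separate matrix-vector products...
step_by_step = R @ (A @ x)
# ...or as one product with the combined matrix R @ A
combined = (R @ A) @ x

print(np.array_equal(step_by_step, combined))  # True
print(R @ A)
```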

Interactive Plot

Now that we know what linear maps are and how they perform transformations, let's experiment with various transformation matrices. Play with the interactive plot below to see the transformations in action!

$$\mathbf{A}=\begin{bmatrix}x_0&y_0\\x_1&y_1\end{bmatrix}$$

Wrap up

In this post, we saw that matrices represent linear maps: functions that perform a transformation on their inputs. Understanding how matrices transform input vectors is key to many ideas in machine learning! With that, we have reached the end of this post. We have covered a lot, and I really enjoyed going over a few important concepts with you. I hope that you enjoyed the post as well :) If you have any questions about the post or data science in general, you can find me on LinkedIn. I would really appreciate any comments or questions, or just a chat about data science and related topics! See you at the next one...